Large Language Models (LLMs) aren't limited to third-party services like ChatGPT, Claude, and others.

You can download and use open-source models on your PC, Mac, or Linux computer.

The most exciting part is that there's a wide variety of open-source LLMs designed for different needs. Open-source means they are free to download and use (subject to each model's license).

Summary: How to Run an LLM Locally

Here’s a quick summary of software you’ll need to download and run LLMs locally on your computer.

Top 5 Software for Running LLMs Locally

Below we’ve listed the best software for running open-source Large Language Models on your computer based on the criteria below.

- You don’t need any coding skills to set up and run LLMs on your laptop with the software listed below.

- All of these tools are free to download and use.

- They are easy to set up on your operating system of choice.

- You can download and run various models from within the software.

- They can run LLMs on your CPU (no need for expensive GPUs!).

Are you excited? Let’s dive in!

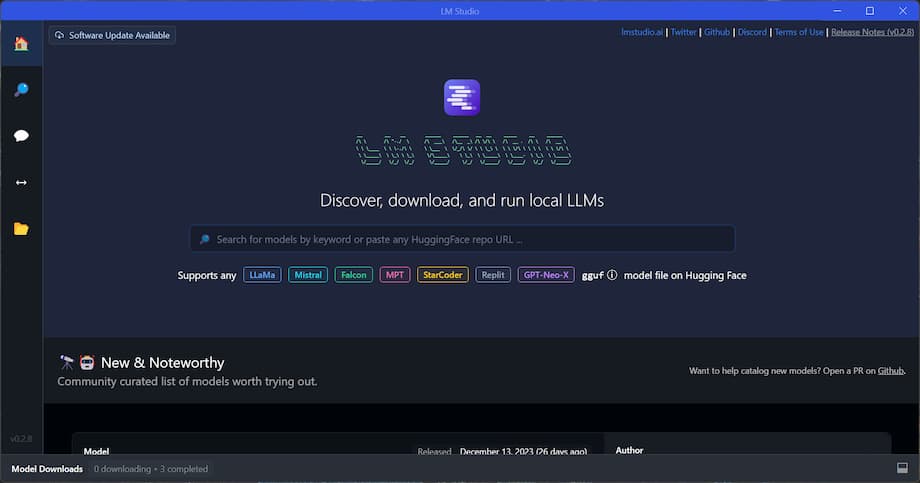

1. LM Studio: Best for running LLMs locally

LM Studio is the best software for running LLMs on your laptop. It has an intuitive, well-designed interface, making it straightforward to use. It supports Windows, Mac, and Linux, and it lets you choose and download LLMs from HuggingFace from within the app.

You’ll need at least 16GB of RAM to run LLMs without slowing down your PC too much. That said, in my tests on a ThinkPad with 16GB of RAM, I still found model responses quite slow.

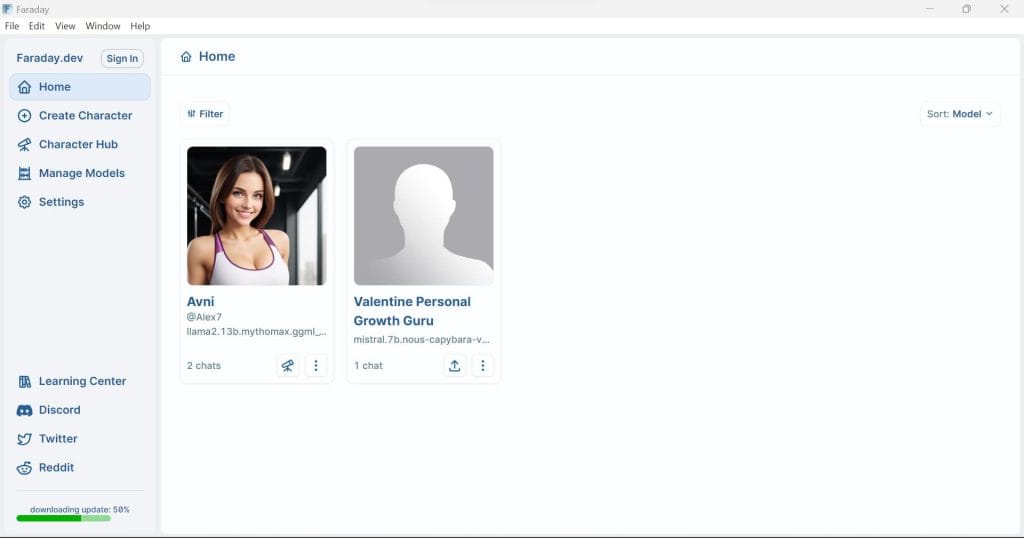

2. Faraday: Best for role-playing LLMs

Faraday is the best software for role-playing LLMs. It allows you to create your own role-playing chatbots, and to download and chat with ones created by others through the “Character Hub.”

The Character Hub has all sorts of role-playing chatbots, including an AI therapist, girlfriend, boyfriend, gym trainer, a Saul Goodman role-playing bot, and much more.

To bring your role-playing bot to life, you can download a model from the “Manage models” section and then use it to create your role-playing bot.

3. Ollama: Easily run LLMs on Mac and Linux

Ollama allows you to download and run LLMs locally from the terminal on Mac and Linux. It doesn’t have a graphical user interface and currently doesn’t support Windows. It supports many models, such as Mistral, Codellama, llama2, Zephyr, WizardCoder, nous-hermes, and more.

Each model in Ollama’s library lists the memory it needs to run on your laptop. For example:
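In the terminal, you’d typically start a model with a command like `ollama run mistral`. While it’s running, Ollama also serves a local HTTP API, which is handy if you want to script your prompts. The sketch below assumes Ollama’s default address (`http://localhost:11434`) and that you’ve already pulled the `mistral` model; it uses only Python’s standard library, and the helper names (`build_generate_request`, `generate`) are our own.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_GENERATE_URL) -> urllib.request.Request:
    """Build a POST request asking Ollama for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text (needs a running Ollama server)."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the full answer comes back in the "response" field.
    return body["response"]


# Example (uncomment once Ollama is running and the model is pulled):
# print(generate("mistral", "Explain LLM quantization in one sentence."))
```

Setting `"stream": False` keeps the example simple; by default Ollama streams the answer back piece by piece, which feels faster in an interactive app.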

- 3B and 7B parameter models require at least 8GB of RAM

- 13B parameter models generally require at least 16GB of RAM

- 34B parameter models generally require at least 32GB of RAM
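If you’d like to check which of these model sizes your machine can handle, the thresholds above can be encoded as a small lookup. This is a rough sketch: the table simply mirrors the guidance listed above (sizes that fall between tiers are rounded up to the next tier), and reading total RAM via `os.sysconf` works on Mac and Linux but not on Windows.

```python
import os
from typing import List, Optional

# (largest parameter count in billions, minimum RAM in GB), mirroring the list above.
RAM_GUIDANCE_GB = [(7, 8), (13, 16), (34, 32)]


def min_ram_gb(params_billion: float) -> Optional[int]:
    """Return the suggested minimum RAM for a model size, or None if beyond the table."""
    for max_params, ram_gb in RAM_GUIDANCE_GB:
        if params_billion <= max_params:
            return ram_gb
    return None


def sizes_that_fit(ram_gb: float, sizes=(3, 7, 13, 34)) -> List[int]:
    """List the model sizes (billions of parameters) whose suggested RAM fits in ram_gb."""
    fitting = []
    for size in sizes:
        needed = min_ram_gb(size)
        if needed is not None and needed <= ram_gb:
            fitting.append(size)
    return fitting


def total_ram_gb() -> float:
    """Total physical memory in GiB; os.sysconf is available on Mac and Linux only."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1024**3


# Round first: the OS reserves some memory, so a "16GB" machine may report ~15.6.
# print(sizes_that_fit(round(total_ram_gb())))
```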

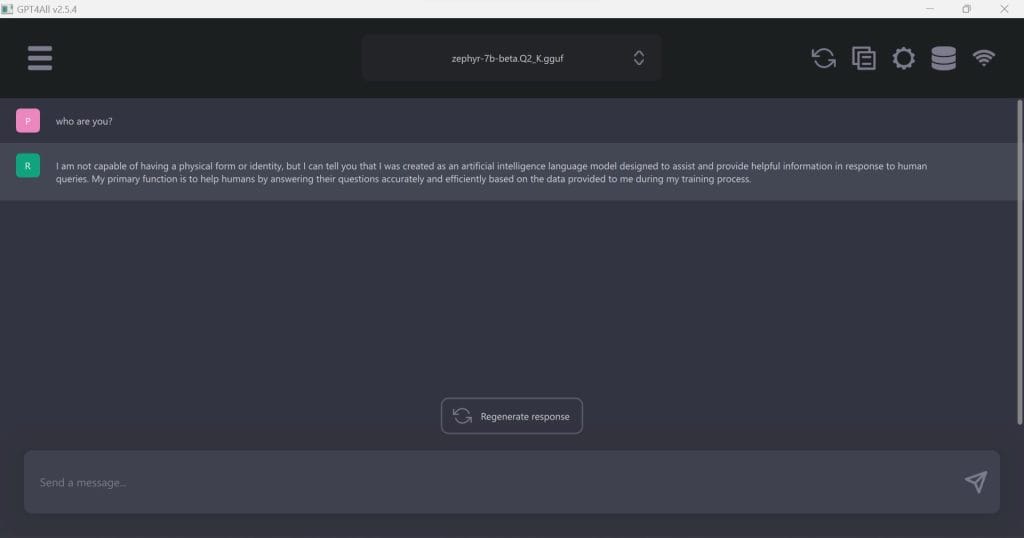

4. GPT4All: Best for running ChatGPT-like models locally

One of the best ways to run an LLM locally is through GPT4All. It has a simple, straightforward interface that makes it easy to download, load, and run a multitude of open-source LLMs, like Zephyr, Mistral, and many more. It can also connect to OpenAI’s GPT-4 using your OpenAI API key, although that model runs on OpenAI’s servers rather than on your machine. GPT4All runs local LLMs on your CPU.

It supports all major operating systems (Windows, macOS, and Linux), with installers available from the GPT4All website.

All compatible models can be downloaded from within GPT4All. Before you download a model, it will also warn you if your hardware doesn’t meet that model’s requirements.

I’ve run quantized models like Zephyr-7B, Mistral-Instruct-7B, Mistral OpenOrca, and more using GPT4All on a machine with 16GB of RAM and an Intel i7-10610U CPU (1.80GHz/2.30GHz). I got an average of 30 tokens/second, which is nowhere close to the fast responses I’d like, but it’s not too slow either.
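To put a tokens-per-second figure in perspective, you can turn it into wait time, or measure it yourself by timing a generation. The sketch below is plain arithmetic; `measure_speed` is a hypothetical helper that accepts any function returning the list of generated tokens, so you’d plug in whatever your tool of choice exposes.

```python
import time
from typing import Callable, Sequence


def tokens_per_second(num_tokens: int, elapsed_seconds: float) -> float:
    """Average generation speed over a run."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return num_tokens / elapsed_seconds


def seconds_for_response(num_tokens: int, speed_tps: float) -> float:
    """How long a response of num_tokens takes at a given speed."""
    if speed_tps <= 0:
        raise ValueError("speed_tps must be positive")
    return num_tokens / speed_tps


def measure_speed(generate_tokens: Callable[[], Sequence[str]]) -> float:
    """Time a (hypothetical) generate function that returns the generated tokens."""
    start = time.perf_counter()
    tokens = generate_tokens()
    elapsed = time.perf_counter() - start
    return tokens_per_second(len(tokens), elapsed)


# At 30 tokens/second, a 300-token answer takes about 10 seconds.
print(seconds_for_response(300, 30))  # → 10.0
```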

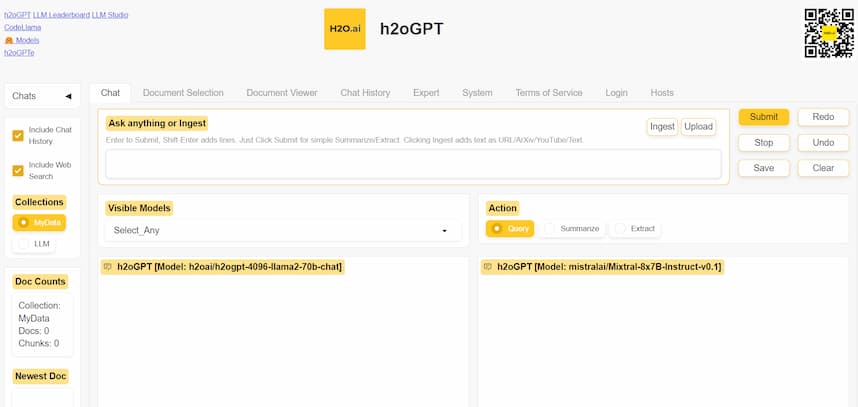

5. h2oGPT: Run LLMs in your browser

h2oGPT is an exception on this list since you don’t install it locally per se; rather, it runs in your browser. We included it because some readers may not have hardware powerful enough to run LLMs locally.

h2oGPT allows you to prompt four different models at the same time. You can also enable the models to retrieve information from the web and upload your data for the models to query, summarize, and more.

Reasons Why You Should Run LLMs Locally

Below are the main reasons why it’s a good idea to run LLMs locally rather than in the cloud.

1. Cost

The biggest benefit of running LLMs on your Mac, Windows, or Linux PC is price. You don’t need to pay a monthly subscription, as you would for ChatGPT-4 or Claude.

Furthermore, third-party LLMs like ChatGPT have usage limits even on paid plans. Once you download an open-source model, you can use it for free, without usage limits, for as long as you like (within the terms of its license).

2. Privacy

Privacy is a huge concern with third-party LLMs, mainly because providers may use your conversations and feedback to improve their models. Open-source LLMs that you download and run locally on your computer don’t send your data to a remote server for fine-tuning.

3. Variety

The world of open-source LLMs offers a wide variety of free models to choose from, including multimodal, text-to-image, and text-to-video models. Each comes with different capabilities to suit your needs.

4. Speed

If your computer has strong specs, such as an Apple M1, M2, or M3 Mac, you can expect very fast output from a local LLM, sometimes faster than cloud-hosted LLMs such as ChatGPT.

5. Censorship

A big problem with third-party LLMs such as ChatGPT is heavy censorship. Running your own LLMs means you can choose censored or uncensored models to suit your preferences.

What is LLM Quantization, Parameters, and Format?

LLMs come in different sizes and varieties. To run LLMs locally successfully, it helps to understand the technical jargon that comes with them. Here’s an example:

- llama-2-7b.ggmlv3.q2_K

I know it looks complicated, but don’t freak out. Here’s a breakdown of what it means:

- Llama-2: This is the name and version of the model. Llama-2 is the second-generation LLM by Meta – the parent company of Facebook.

- 7b: Refers to the number of parameters the model has, in this case 7 billion. As the parameter count increases, the model generally becomes more capable across different tasks. However, larger models also require more computational resources to run and train.

- ggmlv3: This is the file format of the model, similar to what JPG and PNG are for images. However, on August 25th, GGUF, GGML’s successor, was released, and it’s currently considered the best LLM format.

- q2_K: Refers to the quantization of the model. A q2 file is smaller (in gigabytes) than the q3 or q4 version of the same model, at some cost in output quality. Some quantized files also end in a size suffix: S stands for “Small,” and there are also M (Medium) and L (Large) variants, e.g. q3_K_S, q3_K_M, and q3_K_L.

This is an extremely surface-level explanation. I tried to keep it as simple as possible for beginners who are just trying to run the most suitable LLM for their PC.
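To make the naming scheme concrete, here’s a small parser for file names that follow the `name-size.format.quantization` pattern from the example above. It’s a sketch under that assumption: the regular expressions are our own, it strips an optional `.bin` extension, and files that use other conventions (for example, GGUF files that put the format in the extension, or names containing extra dots) won’t parse.

```python
import re
from dataclasses import dataclass
from typing import Optional

# "llama-2-7b" -> name "llama-2", parameters "7" (billions)
_STEM_RE = re.compile(r"^(?P<name>.+)-(?P<params>\d+(?:\.\d+)?)b$", re.IGNORECASE)
# "q2_K" / "q3_K_S" / "q4_0" -> bits, quantization kind, optional size suffix
_QUANT_RE = re.compile(r"^q(?P<bits>\d+)_(?P<kind>[0-9a-z]+)(?:_(?P<size>[sml]))?$", re.IGNORECASE)
_SIZES = {"S": "Small", "M": "Medium", "L": "Large"}


@dataclass
class ModelFile:
    name: str
    params_billion: float
    file_format: str
    quant_bits: int
    quant_kind: str
    size_variant: Optional[str]


def parse_model_filename(filename: str) -> ModelFile:
    parts = filename.split(".")
    if parts[-1].lower() == "bin":  # optional extension on GGML downloads
        parts = parts[:-1]
    if len(parts) != 3:
        raise ValueError(f"expected name.format.quant, got {filename!r}")
    stem, file_format, quant = parts

    stem_match = _STEM_RE.match(stem)
    quant_match = _QUANT_RE.match(quant)
    if stem_match is None or quant_match is None:
        raise ValueError(f"unrecognized model file name: {filename!r}")

    size = quant_match.group("size")
    return ModelFile(
        name=stem_match.group("name"),
        params_billion=float(stem_match.group("params")),
        file_format=file_format,
        quant_bits=int(quant_match.group("bits")),
        quant_kind=quant_match.group("kind"),
        size_variant=_SIZES[size.upper()] if size else None,
    )


print(parse_model_filename("llama-2-7b.ggmlv3.q2_K"))
# → ModelFile(name='llama-2', params_billion=7.0, file_format='ggmlv3', quant_bits=2, quant_kind='K', size_variant=None)
```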

Best LLMs to Run Locally on Your Computer

Open-source LLM hubs such as HuggingFace allow developers to publish different types of models for users to download and use for free. Examples of the best LLMs to use locally are:

- Mistral-OpenOrca: My go-to for a general-purpose LLM. I use it for brainstorming, story writing, generating code, and a bit of roleplay.

- Zephyr-7B-beta: A small, lightweight, but very capable uncensored LLM that can role-play, tell jokes, write fiction, and more.

- Nous-Hermes-13B: The best local LLM for roleplay, creative writing, and other tasks.

- WizardCoder-15B: A dedicated coding LLM that has proved to be quite good, though not as good as ChatGPT-4. Note that this model requires at least 32GB of RAM.

These and other LLMs are available to download within the software mentioned above.

Due to the fast pace of LLM development, this list is bound to change (or already has). You can keep up with the best local LLMs by checking out the HuggingFace Open LLM Leaderboard or the Models page.

Hardware Requirements For Running LLMs Locally

As you’ve seen above, open-source LLMs come in different shapes and sizes. The higher the parameter count, the more memory and compute the LLM needs to run efficiently. As a rule of thumb:

- 3B parameter models like Orca Mini can run on a minimum of 8GB of RAM.

- 7B parameter models can start at 8GB, but run comfortably with 16GB of memory.

- 13B and larger models run best with 32GB of memory or more.
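Where do these numbers come from? A common rough estimate is that a model’s weights take about (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that formula. It’s an approximation that ignores the memory used by the context window (the KV cache), the runtime itself, your operating system, and the fact that k-quant formats mix bit widths, so real files and memory use run somewhat higher.

```python
def approx_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in (decimal) gigabytes.

    billions of params * bits per weight / 8 bits per byte = gigabytes,
    since the 1e9 in "billion" cancels the 1e9 bytes in a gigabyte.
    Excludes KV cache, runtime overhead, and mixed-precision details.
    """
    if params_billion <= 0 or bits_per_weight <= 0:
        raise ValueError("params_billion and bits_per_weight must be positive")
    return params_billion * bits_per_weight / 8


for params in (3, 7, 13):
    for bits in (4, 16):
        print(f"{params}B at {bits}-bit: ~{approx_weights_gb(params, bits):.1f} GB")
```

By this estimate, a 4-bit 7B model needs roughly 3.5 GB just for its weights, which is why it can squeeze onto an 8GB machine, while the same model at 16-bit (about 14 GB) would not. This is also why quantized models are the norm for running LLMs locally.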

Conclusion: How to Run LLMs Locally on Your Laptop

ChatGPT and other third-party models have quickly changed the landscape of technology. However, despite their convenience, users have also become aware of their limitations and risks. As a result, open-source LLMs have gained traction thanks to advantages such as privacy and cost.

You can run LLMs locally on your laptop by using software such as:

- LM Studio: Best for running LLMs locally

- Faraday: Best for role-playing LLMs

- Ollama: Easily run LLMs on Mac and Linux

- GPT4All: Best for running ChatGPT-like models locally

- h2oGPT: Run LLMs in your browser
