Google’s artificial intelligence model, Gemini, has recently come under intense scrutiny. Critics have accused the tool’s image generation of displaying racial bias and disseminating misleading information. The controversy has raised serious questions about the dangers of biased AI in widely used applications.

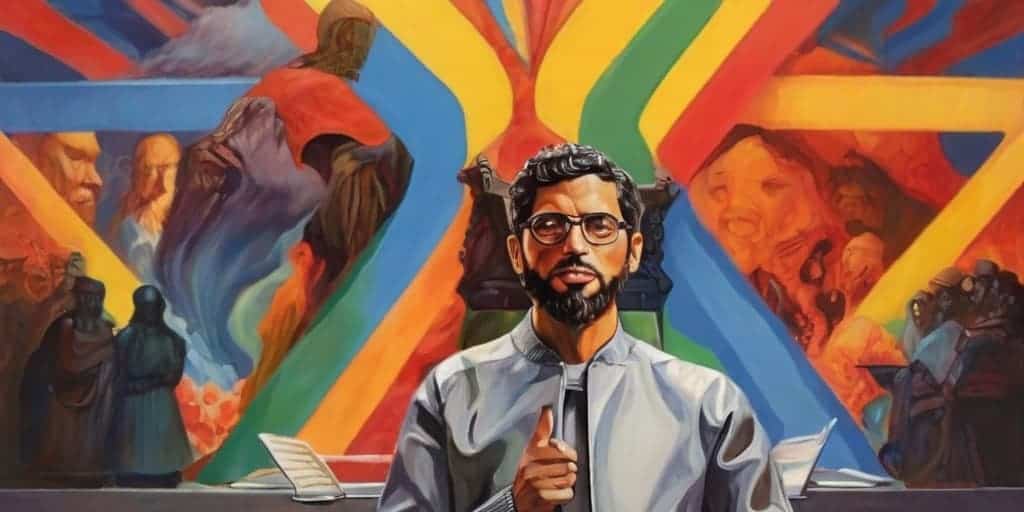

Sundar Pichai’s Response

Google’s CEO, Sundar Pichai, has responded to the controversy. “We deeply regret the unacceptable responses from our AI model, Gemini. We were wrong and we are working hard to address these issues,” stated Pichai. He further emphasized Google’s commitment to ensuring the fairness and accuracy of its AI models and tools.

Implications for AI and Tech Industry

The outcry over Gemini has ignited a broader discussion about the risks associated with AI and the need for more robust oversight and regulation. Industry experts are urging tech companies to take greater responsibility for the potential negative impacts of their AI tools and advocating for governments to establish laws to regulate AI use.

Looking Forward: The Future of AI

The fallout from Gemini serves as a stark reminder of the challenges associated with incorporating AI into everyday life. As tech companies continue to push the boundaries of AI, issues of bias, fairness, and accuracy are likely to remain central to the discussion.

Role of Tech Giants in AI Accountability

By taking responsibility for the Gemini incident, Google sets a hopeful precedent for other tech companies to follow. As AI continues to evolve and permeate more aspects of life, accountability and transparency from tech giants become increasingly important.
